Bad Actors, Email Addresses .... and the Dot

from greg

“It’s all about being a part of something in the community, socializing with people who share interests and coming together to help improve the world we live in.” – Zach Braff

In any successful network, bad actors will emerge with the simple goal of achieving some result that is in their own interest or in the interest of whoever hired them. These bad actors could be code, individual users on a VPN coming from a location where there is no recourse to investigate, or an army of humans clicking on behalf of their country. The motivations are endless, and we are certain to hear lots about misinformation over the next few months with the elections around the corner. Whole encyclopedias could be written on the topic; each attack vector has similarities to those seen on other social networks, with slight deviations. Most importantly, it is a cat-and-mouse game ... once you are able to minimize the mechanisms by which someone is abusing your system, they will figure out ways around it. If they cannot and go away, someone else eventually will. The previous ones will come back in a year to see if you let your guard down.

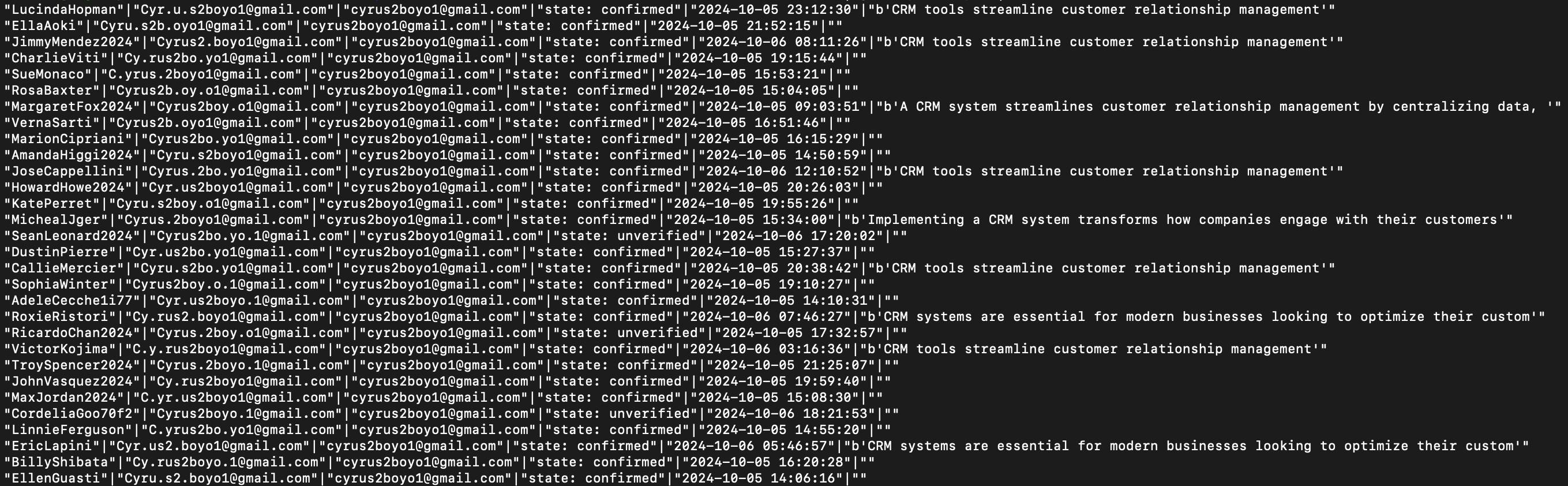

Check out this output from an analysis of new accounts created over the last few days:

The second column shows emails such as Cyr.u.s2boyo1@gmail.com and Cy.rus2boyo1@gmail.com ... Gmail users can add dots to their email address, or append a plus sign and more letters/numbers after the username, to create what looks like a unique email address but is not actually unique. In this example, mail always goes to the canonical email address cyrus2boyo1@gmail.com. There are legitimate use cases for doing this out in the real world (for example, I might create a Threads account with the email address rockhunters08+threads@gmail.com, or maybe your testing team wants to create hundreds of emails to test something that all route to the same account). Nonetheless, each social network has to decide where and when this is allowed and come up with rules to prevent the creation in the first place if possible, or else detect the bad actor and disable them before they harm your users or your business.
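To make the dot and plus-sign trick concrete, here is a minimal sketch of collapsing an address to its canonical form. The function name `canonical_email` and the `GMAIL_DOMAINS` set are made up for illustration and are not how Flipboard does it. The Gmail rules (dots ignored, everything after a `+` ignored, googlemail.com treated the same as gmail.com) are Gmail's documented behavior; lowercasing addresses at other providers is an assumption that happens to hold for most of them.

```python
# Hypothetical helper: collapse Gmail dot/plus variants to one canonical address.
GMAIL_DOMAINS = {"gmail.com", "googlemail.com"}


def canonical_email(address: str) -> str:
    """Return a normalized form of `address` for duplicate detection."""
    local, sep, domain = address.strip().rpartition("@")
    if not sep or not local or not domain:
        raise ValueError(f"not an email address: {address!r}")

    local = local.lower()
    domain = domain.lower()

    if domain in GMAIL_DOMAINS:
        # Drop the "+anything" suffix, then remove every dot.
        local = local.split("+", 1)[0].replace(".", "")
        domain = "gmail.com"

    # Other providers are left alone apart from lowercasing (an assumption),
    # because dots and plus signs can be significant there.
    return f"{local}@{domain}"


if __name__ == "__main__":
    for raw in ("Cyr.u.s2boyo1@gmail.com", "Cy.rus2boyo1@gmail.com"):
        print(raw, "->", canonical_email(raw))
```

Storing the canonical form next to the address the user typed lets you count accounts per canonical address without rejecting the legitimate `+threads` style of use outright.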

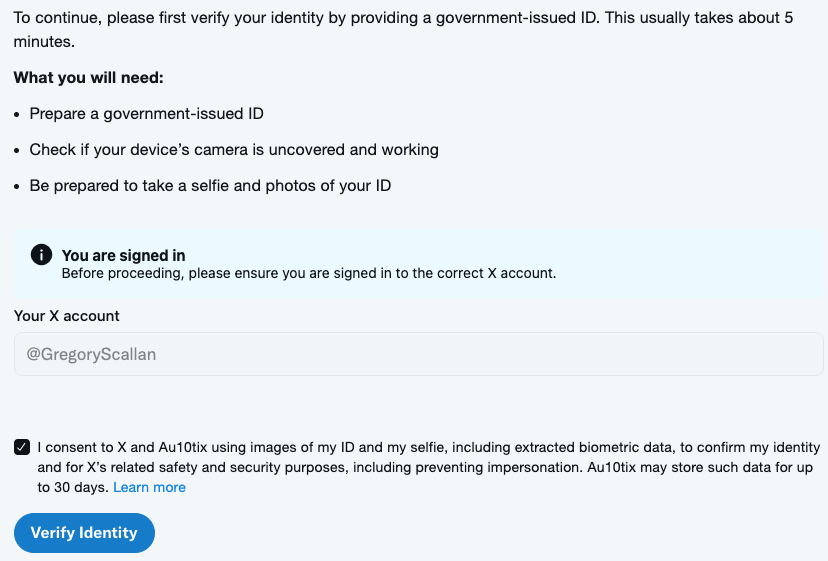

In this particular case, the fact that the user is verifying these accounts, that all of them were created quickly one after the other, and that many already have identical bios implies they are likely planning to follow themselves to inflate follower numbers (and later, possibly, like or reflip flips from each other) with the goal of gaming our recommendation algorithms. Who knows, really. On Flipboard, this will never work because we, in general, take an allow-list approach to recommendations, so unless our editorial staff (a.k.a. carbon-based life forms) have reviewed your account or domain of content, it won't make it into other people's For You feeds. This is one of the reasons why we are SLOWLY federating Flipboard accounts: we do not want this gamification to spill over into the fediverse.
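The signals above (many accounts on one canonical email, created in quick succession, with repeated bios) can be sketched as a small check. Everything here is an assumption for illustration: the `Account` fields, the `flag_bursts` name, and the thresholds of 3 accounts within 1 hour are invented, not Flipboard's actual schema or rules.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Account:
    """Hypothetical account record; `canonical_email` is already normalized."""
    username: str
    canonical_email: str
    created_at: datetime
    bio: str = ""


def flag_bursts(accounts, min_accounts=3, window=timedelta(hours=1)):
    """Flag canonical emails with >= min_accounts sign-ups inside `window`."""
    by_email = defaultdict(list)
    for account in accounts:
        by_email[account.canonical_email].append(account)

    flagged = {}
    for email, group in by_email.items():
        group = sorted(group, key=lambda a: a.created_at)

        # Sliding window over creation times to find the densest burst.
        start = 0
        largest_burst = 0
        for end, account in enumerate(group):
            while account.created_at - group[start].created_at > window:
                start += 1
            largest_burst = max(largest_burst, end - start + 1)

        if largest_burst >= min_accounts:
            # Count how often the most common non-empty bio repeats.
            bios = Counter(a.bio.strip().lower() for a in group if a.bio.strip())
            flagged[email] = {
                "usernames": [a.username for a in group],
                "largest_burst": largest_burst,
                "most_repeated_bio_count": max(bios.values(), default=0),
            }
    return flagged
```

Passing a much wider `window` (say, several months) with a higher `min_accounts` is one way to reuse the same check for slow, one-account-a-day patterns. A flag here is a prompt for a human to look, not proof of abuse.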

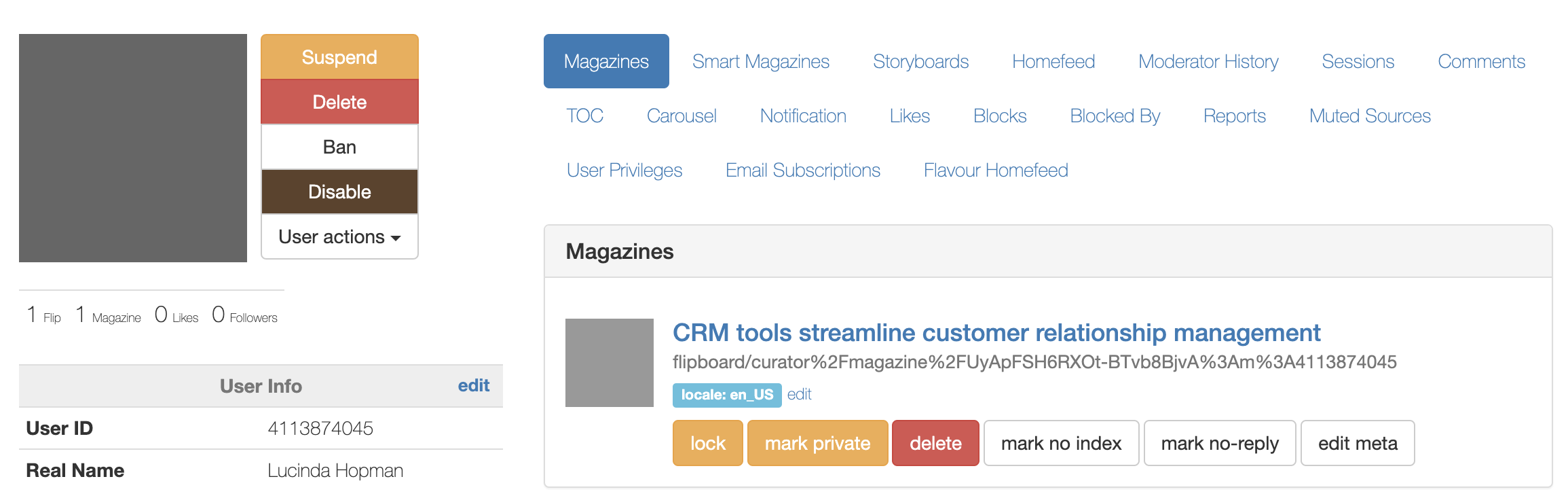

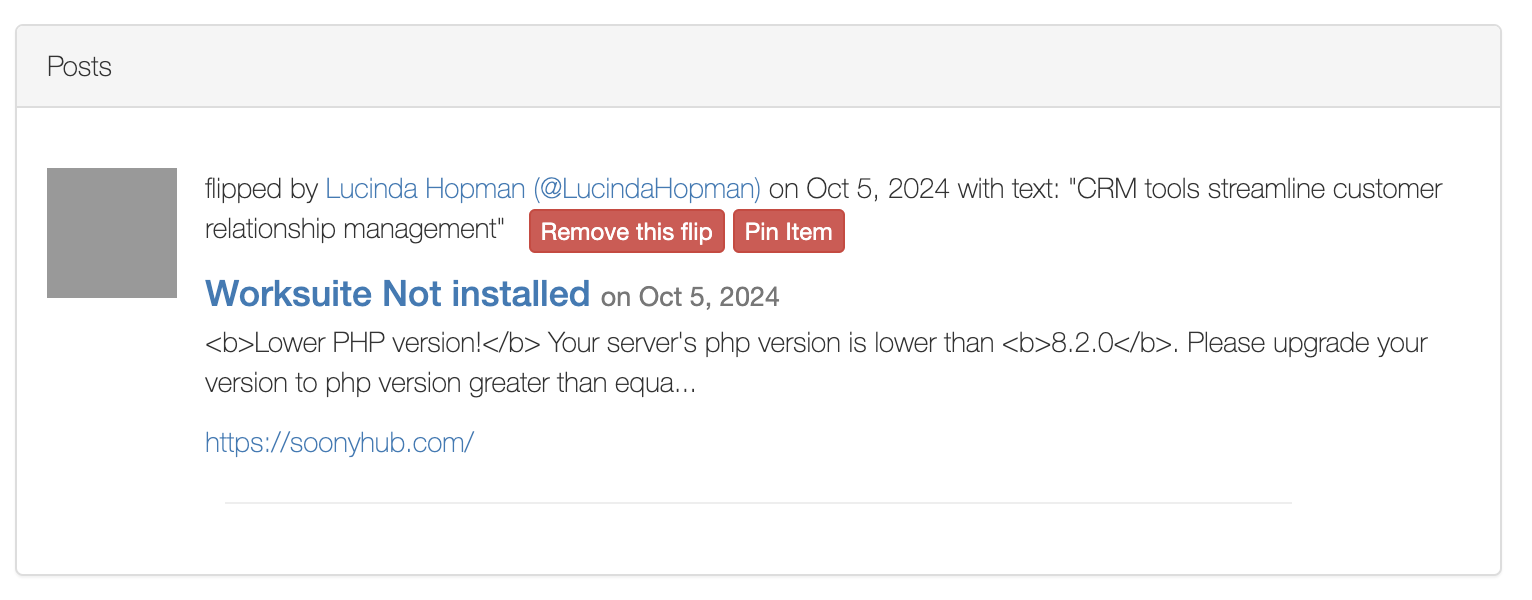

There is more analysis we can do if we are not sure whether this is a bad actor. For example, in the images below, you can see they already created a magazine and flipped 1 article into it. That ... pattern ... is something we could write a chapter on and is specific to Flipboard, though I suppose the equivalent on other social networks is whatever write action you can take on that network (e.g., Post).

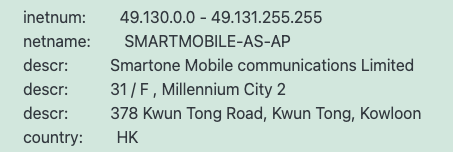

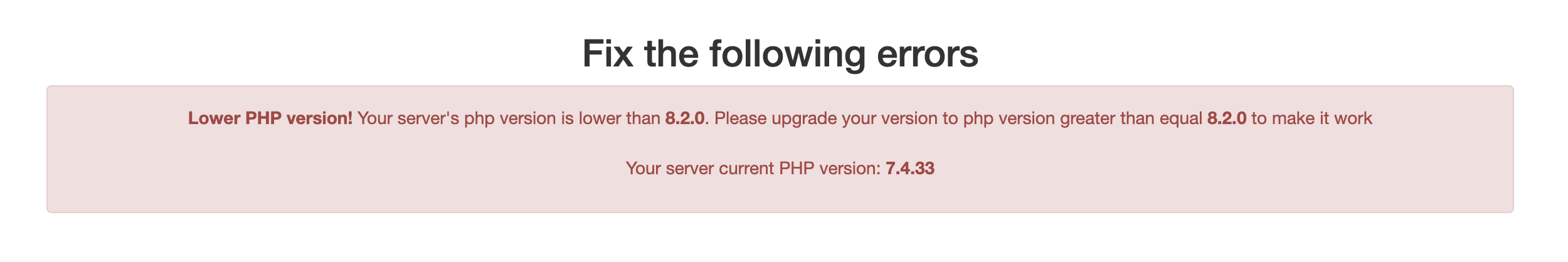

You could then use curl to fetch the website where the article is located, and you will get something like the image below.
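If you would rather script that lookup than run curl by hand, a rough Python equivalent might look like this. The names `fetch_page` and `extract_title`, the User-Agent string, and the 200 KB read cap are all illustrative choices, and pulling out the page title is just one cheap signal to eyeball.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen


class TitleParser(HTMLParser):
    """Collects the text inside the page's <title> element."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self.title = ""

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def extract_title(html: str) -> str:
    """Return the page title with whitespace collapsed, or '' if absent."""
    parser = TitleParser()
    parser.feed(html)
    return " ".join(parser.title.split())


def fetch_page(url: str, timeout: float = 10.0):
    """Fetch `url` and return (HTTP status, first ~200 KB of body as text)."""
    request = Request(url, headers={"User-Agent": "moderation-check/0.1"})
    with urlopen(request, timeout=timeout) as response:
        body = response.read(200_000).decode("utf-8", errors="replace")
        return response.status, body
```

Calling `fetch_page(article_url)` and then `extract_title(body)` gives a quick look at what the flipped article actually points to. Only fetch suspicious links from a sandboxed machine.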

You could take this analysis further and look at who owns the domain, when it was registered, etc. ... it goes on and on. For now, it is clear this person is not a legitimate user and deserves to be disabled. We'll run this check over accounts daily as well as over longer periods of time. I've seen cases where a bad actor will create 1 account per email address a day over many months and, worse, do that for many email addresses via a VPN with changing IP addresses. Always fun.

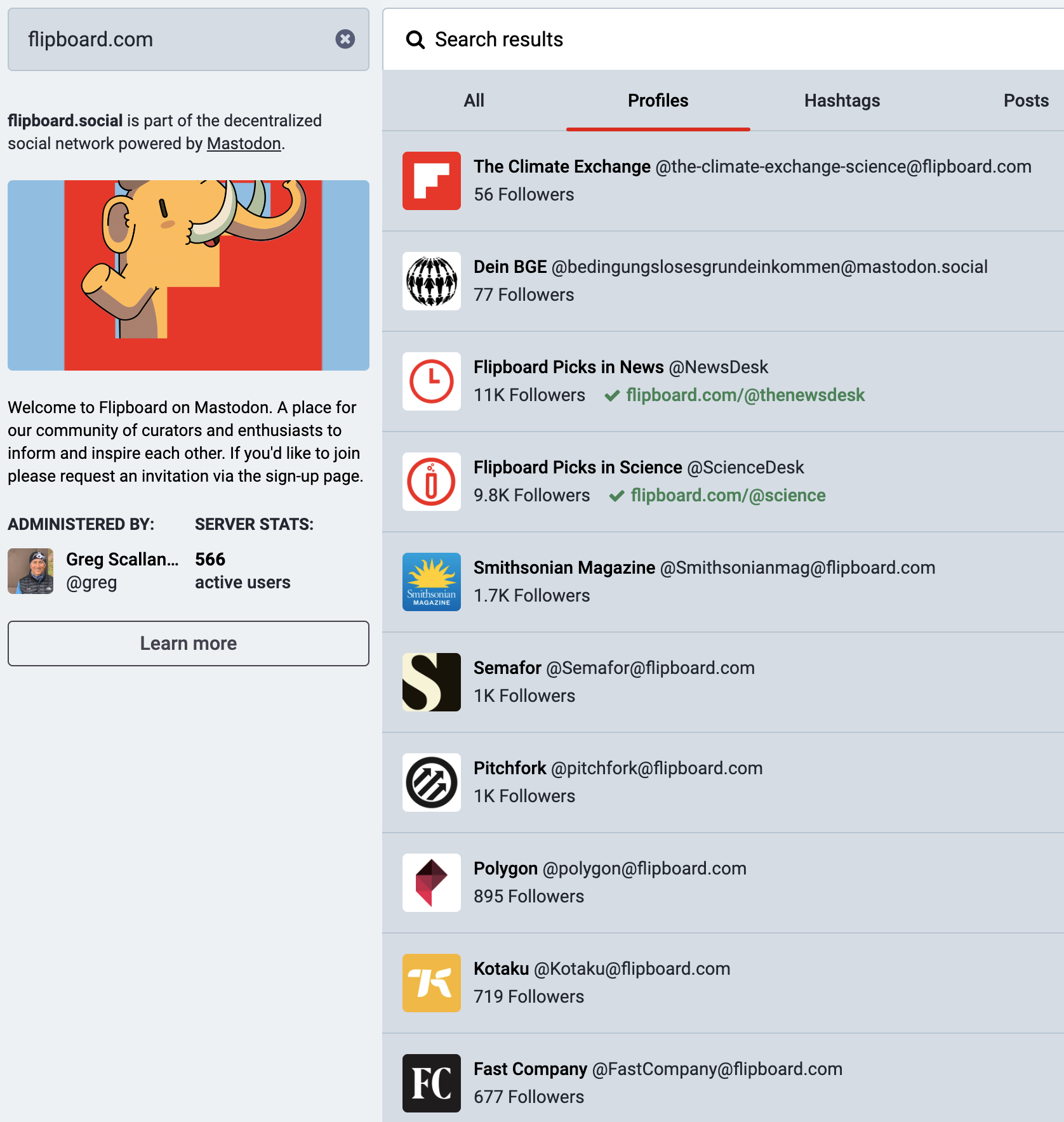

In the fediverse, this becomes more complicated because this user could use the same tactic across tens of thousands of instances where this kind of analysis is not readily available.

Thoughts? I'd love to know what you think!

#moderation #trust #safety